...

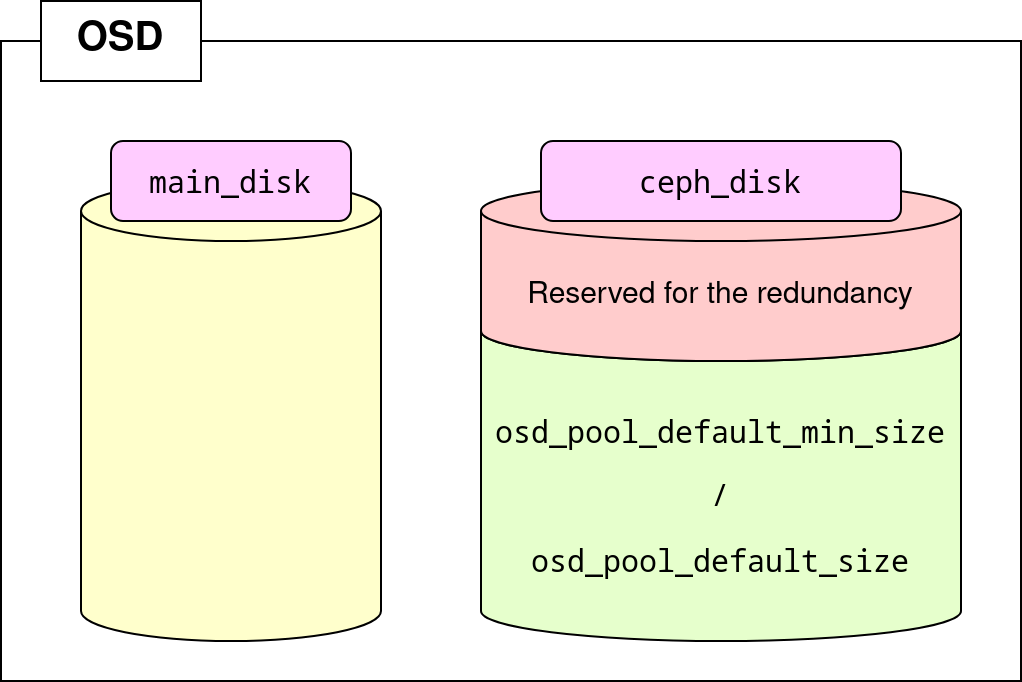

- `configure_firewall`: Boolean to configure the firewall (`true` by default)
- `ceph_origin`: Origin of the installed Ceph files (must be set to `distro`). SEAPATH installs Ceph with an installer (see the installation section)
- `ceph_osd_disk`: Device used to store data (only for ceph-osd hosts); Ceph builds an RBD on this disk. For the CI to succeed, the path should be under `/dev/disk/by-path`
- `cluster_network`: Address block used to access the cluster network
- `dashboard_enabled`: Boolean to enable the dashboard (must be set to `false`)
- `devices`: List of devices to use for the shared storage. All specified devices are used entirely
- `lvm_volumes`: List of volumes to use for the shared storage. Use it instead of `devices` to use only part of a device
- `monitor_address`: Address the host binds to
- `ntp_service_enabled`: Boolean to enable the NTP service (must be set to `false`). SEAPATH installs and configures its own NTP client
- `osd_pool_default_min_size`: Minimum number of available OSDs (replicas) for the pools to keep serving I/O (recommended: `ceil(osd_pool_default_size / 2.0)`)
- `osd_pool_default_size`: Number of OSDs in the cluster, i.e. the number of replicas Ceph keeps of each object
- `public_network`: Address block used to access the public network
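As an illustration, the constraints above can be checked before running the playbooks. The following Python sketch is hypothetical: the function `check_ceph_vars` and the sample values are not part of SEAPATH, and the code only encodes the rules stated in this list, including the recommended `osd_pool_default_min_size`.

```python
import math


# Hypothetical helper (not part of seapath-ansible): returns a list of
# problems found in a dict of inventory variables, based on the rules above.
def check_ceph_vars(v: dict) -> list:
    problems = []
    if v.get("ceph_origin") != "distro":
        problems.append("ceph_origin must be set to 'distro'")
    # Both flags must be explicitly false; a missing value is reported too.
    if v.get("dashboard_enabled") is not False:
        problems.append("dashboard_enabled must be set to false")
    if v.get("ntp_service_enabled") is not False:
        problems.append("ntp_service_enabled must be set to false")
    # ceph_osd_disk only exists on ceph-osd hosts, so it may be absent.
    disk = v.get("ceph_osd_disk")
    if disk is not None and not disk.startswith("/dev/disk/by-path/"):
        problems.append("ceph_osd_disk should be a /dev/disk/by-path path")
    size = v.get("osd_pool_default_size")
    min_size = v.get("osd_pool_default_min_size")
    if size is not None and min_size is not None:
        recommended = math.ceil(size / 2.0)
        if min_size != recommended:
            problems.append(
                f"osd_pool_default_min_size is {min_size}, recommended {recommended}"
            )
    return problems


example = {
    "ceph_origin": "distro",
    "dashboard_enabled": False,
    "ntp_service_enabled": False,
    "ceph_osd_disk": "/dev/disk/by-path/pci-0000:00:1f.2-ata-2",  # hypothetical
    "osd_pool_default_size": 3,
    "osd_pool_default_min_size": 2,
    "public_network": "192.168.55.0/24",  # hypothetical
}
print(check_ceph_vars(example))  # []
```

With three OSDs, `ceil(3 / 2.0)` gives 2, so the sample inventory passes.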

Volume specifications

If `lvm_volumes` is defined, the `devices` variable is ignored.

When a volume is defined for the shared storage, several fields must be set for both seapath-ansible and ceph-ansible.
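A minimal sketch of the precedence rule, assuming the inventory variables are loaded into a Python dict. The helper `storage_source` and the device, LV and VG names are hypothetical; the `lvm_volumes` entry follows the ceph-ansible style (`data` / `data_vg`), and treating an empty list as "not defined" is an assumption.

```python
# Hypothetical helper illustrating the rule: lvm_volumes takes precedence over
# devices. An empty or missing list is treated as "not defined" (assumption).
def storage_source(v: dict) -> tuple:
    if v.get("lvm_volumes"):
        return ("lvm_volumes", v["lvm_volumes"])
    return ("devices", v.get("devices", []))


inventory = {
    "devices": ["/dev/sdb"],  # hypothetical device, ignored here
    # ceph-ansible style entry: a logical volume and its volume group
    "lvm_volumes": [{"data": "lv_ceph", "data_vg": "vg_ceph"}],  # hypothetical names
}
print(storage_source(inventory)[0])  # lvm_volumes
```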

...

- `mon_osd_min_down_reporters`: must be set to `1`
- `osd_crush_chooseleaf_type`: must be set to `1`
- `osd_pool_default_min_size`: Minimum number of available OSDs (replicas) for the pools to keep serving I/O (recommended: `ceil(osd_pool_default_size / 2.0)`)
- `osd_pool_default_pg_num`: must be set to `128`
- `osd_pool_default_pgp_num`: must be set to `128`
- `osd_pool_default_size`: Number of OSDs in the cluster, i.e. the number of replicas Ceph keeps of each object
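These are Ceph configuration options, so they may end up in the `[global]` section of `ceph.conf` (for instance through ceph-ansible's `ceph_conf_overrides`; how seapath-ansible actually passes them is not described here). The sketch below renders such a section with Python's `configparser`; `render_global_section` is a hypothetical helper.

```python
import configparser
import io
import math

# Values that the list above says must be fixed.
FIXED_OPTIONS = {
    "mon_osd_min_down_reporters": 1,
    "osd_crush_chooseleaf_type": 1,  # 1 = host in the default CRUSH type hierarchy
    "osd_pool_default_pg_num": 128,
    "osd_pool_default_pgp_num": 128,
}


def render_global_section(osd_pool_default_size: int) -> str:
    options = dict(FIXED_OPTIONS)
    options["osd_pool_default_size"] = osd_pool_default_size
    # Recommended minimum: ceil(osd_pool_default_size / 2.0)
    options["osd_pool_default_min_size"] = math.ceil(osd_pool_default_size / 2.0)
    parser = configparser.ConfigParser()
    parser["global"] = {key: str(value) for key, value in options.items()}
    buf = io.StringIO()
    parser.write(buf)  # writes "key = value" lines under [global]
    return buf.getvalue()


print(render_global_section(3))
```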

...

Override mon configuration

...

Ceph provides Ansible playbooks and roles (ceph-ansible) to configure the software; their documentation is available here.

Warnings

| Note |
|---|
| If this step fails, you must restart from the previous step; use LVM snapshots to do this. At the end of this step, make sure that: |

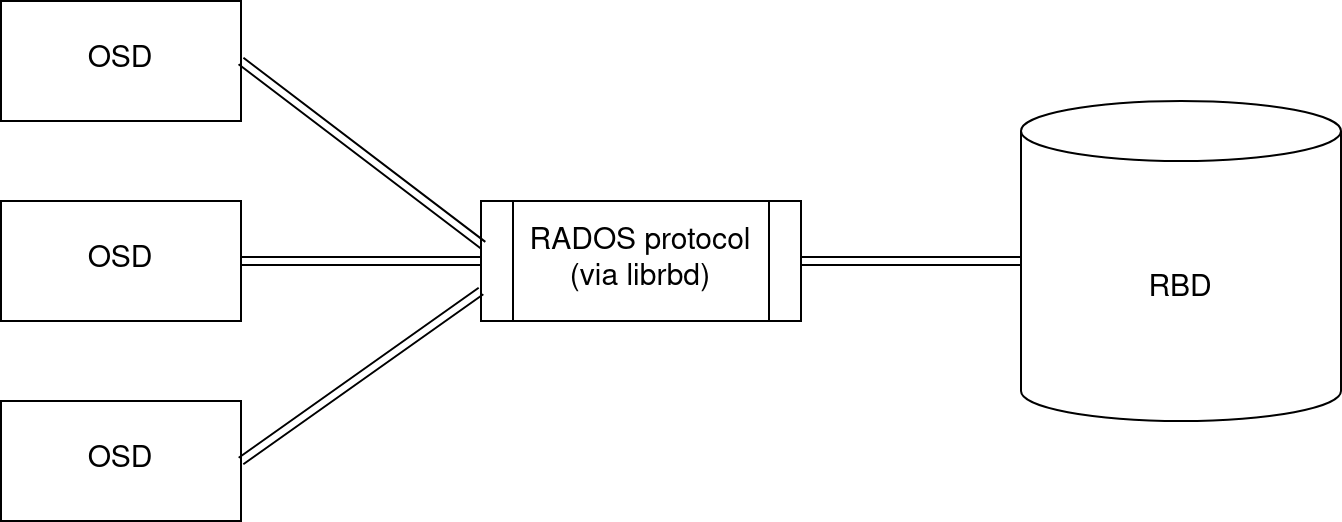
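The LVM snapshot mentioned in the note can be taken with the standard LVM2 tools. The sketch below only builds the command lines (`lvcreate --snapshot`, `lvconvert --merge`, `lvremove`) and runs nothing; the volume group, logical volume and snapshot names and the 10G snapshot size are hypothetical and must be adapted to the host.

```python
import shlex


# Hypothetical volume group, logical volume and snapshot names: adapt them to
# the host layout. These functions only build the commands; they run nothing.
def snapshot_cmd(vg: str, lv: str, snap: str, size: str = "10G") -> list:
    # Copy-on-write snapshot of vg/lv; size is the space reserved for changes.
    return ["lvcreate", "--snapshot", "--size", size, "--name", snap, f"{vg}/{lv}"]


def rollback_cmd(vg: str, snap: str) -> list:
    # Merge the snapshot back into its origin, discarding changes made since.
    # If the origin is in use (e.g. the root LV), LVM defers the merge until
    # the origin is next activated, typically at reboot.
    return ["lvconvert", "--merge", f"{vg}/{snap}"]


def drop_snapshot_cmd(vg: str, snap: str) -> list:
    # Remove the snapshot once the step has succeeded (-f: no confirmation).
    return ["lvremove", "-f", f"{vg}/{snap}"]


print(shlex.join(snapshot_cmd("vg0", "root", "before_ceph")))
# lvcreate --snapshot --size 10G --name before_ceph vg0/root
```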

RADOS Block Devices

During this step, Ceph builds a RADOS block device (RBD) from `ceph_osd_disk`. A storage entry (a pool) is automatically generated for libvirt. Once the service is started, the hypervisor can use this pool to launch VMs.
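For reference, a libvirt storage pool backed by Ceph RBD is described by an XML document of type `rbd`. SEAPATH generates its pool automatically; the following sketch only shows the shape of such a definition. The helper `rbd_pool_xml`, the pool names, monitor addresses, user name and secret UUID are placeholders.

```python
import xml.etree.ElementTree as ET


# Hypothetical helper: builds a libvirt storage pool definition of type "rbd".
def rbd_pool_xml(libvirt_name: str, ceph_pool: str, monitors: list,
                 username: str, secret_uuid: str) -> str:
    pool = ET.Element("pool", type="rbd")
    ET.SubElement(pool, "name").text = libvirt_name
    source = ET.SubElement(pool, "source")
    ET.SubElement(source, "name").text = ceph_pool  # Ceph pool holding the images
    for mon in monitors:
        # 6789 is the default Ceph monitor port (msgr v1)
        ET.SubElement(source, "host", name=mon, port="6789")
    auth = ET.SubElement(source, "auth", type="ceph", username=username)
    # The secret must already exist in libvirt and hold the Ceph client key
    ET.SubElement(auth, "secret", uuid=secret_uuid)
    return ET.tostring(pool, encoding="unicode")


pool_xml = rbd_pool_xml(
    "ceph", "rbd", ["192.168.55.1", "192.168.55.2", "192.168.55.3"],
    "libvirt", "00000000-0000-0000-0000-000000000000",
)
print(pool_xml)
```

A definition like this could be loaded with `virsh pool-define` and started with `virsh pool-start`.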

...

This disk will be used with the librbd library provided by Ceph.
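librbd can also be used directly, for example through the Python bindings shipped with Ceph (the `rados` and `rbd` modules, packaged on Debian as `python3-rados` and `python3-rbd`). This is a sketch assuming a reachable cluster, a readable `/etc/ceph/ceph.conf` and a keyring for the default client; `create_vm_disk`, the pool and the image name are hypothetical. QEMU accesses RBD images through librbd itself, so this is not required to run VMs.

```python
GIB = 1024 ** 3


def create_vm_disk(pool: str, image: str, size_gib: int,
                   conffile: str = "/etc/ceph/ceph.conf") -> int:
    """Create an RBD image through librbd and return its size in bytes."""
    # Imported here so the module can be loaded on hosts without the bindings.
    import rados
    import rbd

    cluster = rados.Rados(conffile=conffile)
    cluster.connect()
    try:
        ioctx = cluster.open_ioctx(pool)
        try:
            rbd.RBD().create(ioctx, image, size_gib * GIB)
            with rbd.Image(ioctx, image) as img:
                return img.size()
        finally:
            ioctx.close()
    finally:
        cluster.shutdown()


# Example call (requires a running cluster; names are hypothetical):
# create_vm_disk("rbd", "vm1-disk0", 20)
```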


More details here.